Data Science with Python (I)

Posted: 21 May, 2017 Filed under: Data Science Resources | Tags: Anaconda, Data Science Resources, Pandas, Python Leave a commentData Science involves you make smart decisions for big or complex problems. That’s why you need evolved tools to manage this situations.

Python is a perfect tool, it has an incredible number of math-related libraries such as Numpy, Matlab, Pandas or Scikit-learn that reduces your workload.

Next Station in Data Science path: DB NonSQL

Posted: 6 June, 2015 Filed under: Data Science Resources | Tags: Big Data, cassandra, document databases, graph stores, hbase, key-value stores, mongodb, nonsql, rdbms, RMongo, rmongodb, ubuntu, vertica, wide-column stores Leave a commentIn my Data Scientist roadmap one important issue is where to storage the information. You can storage the logs in Hadoop, but this is a first step that I will explain in other post. Then you can imagine that you can storage the info in a database.

Joining concepts like database and Big Data you will obtain a NonSQL best answer. But What is a NonSQL database?

First take a look in Wikipedia: “A NoSQL (often interpreted as Not only SQL) database provides a mechanism for storage and retrieval of data that is modeled in means other than the tabular relations used inrelational databases. Motivations for this approach include simplicity of design, horizontal scaling, and finer control over availability. The data structures used by NoSQL databases (e.g. key-value, graph, or document) differ from those used in relational databases, making some operations faster in NoSQL and others faster in relational databases. The particular suitability of a given NoSQL database depends on the problem it must solve.”

Monte Carlo Method and Pi Day!

Posted: 19 April, 2015 Filed under: Data Science Resources | Tags: Monte Carlo, Number Pi, Ulam, von Neumann Leave a commentMonte Carlo methods (or Monte Carlo experiments) are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other mathematical methods. Monte Carlo methods are mainly used in three distinct problem classes: optimization, numerical integration, and generation of draws from a probability distribution.

In physics-related problems, Monte Carlo methods are quite useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of risk in business and, in math, evaluation of multidimensional definite integrals with complicated boundary conditions. In application to space and oil exploration problems, Monte Carlo based predictions of failure, cost overruns and schedule overruns are routinely better than human intuition or alternative “soft” methods.

The modern version of the Monte Carlo method was invented in the late 1940s by Stanislaw Ulam, while he was working on nuclear weapons projects at the Los Alamos National Laboratory. Immediately after Ulam’s breakthrough, John von Neumann understood its importance and programmed the ENIAC computer to carry out Monte Carlo calculations.

Being secret, the work of von Neumann and Ulam required a code name. A colleague of von Neumann and Ulam, Nicholas Metropolis, suggested using the name Monte Carlo, which refers to the Monte Carlo Casino in Monaco where Ulam’s uncle would borrow money from relatives to gamble. Using lists of “truly random” random numbers was extremely slow, but von Neumann developed a way to calculate pseudorandom numbers, using the middle-square method. Though this method has been criticized as crude, von Neumann was aware of this: he justified it as being faster than any other method at his disposal, and also noted that when it went awry it did so obviously, unlike methods that could be subtly incorrect.

Central Limit Theorem, the heart of inferential statistics!

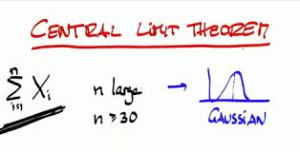

Posted: 19 February, 2015 Filed under: Data Science Resources | Tags: Abraham de Moivre, bean machine, Central Limit Theorem, cholesterol, Exponential Distribution, Francis Galton, Galton box, Moivre-Laplace theorem, Normal Distribution, Pierre-Simon Laplace, quincunx, rexp Leave a commentIn one of my previous post I’ve attached you the following image: https://datasciencecgp.files.wordpress.com/2015/01/roadtodatascientist1.png , in this image we have an interesting roadmap to follow to be an Horizontal Data Scientist.

Then the following station will be do a deep insight in the heart of inferential statistics the Central Limit Theorem.

The central limit theorem has an interesting history. The first version of this theorem was postulated by the French-born mathematician Abraham de Moivre who, in a remarkable article published in 1733, used the normal distribution to approximate the distribution of the number of heads resulting from many tosses of a fair coin. This finding was far ahead of its time, and was nearly forgotten until the famous French mathematician Pierre-Simon Laplace rescued it from obscurity in his monumental work Théorie Analytique des Probabilités, which was published in 1812. Laplace expanded De Moivre’s finding by approximating the binomial distribution with the normal distribution. But as with De Moivre, Laplace’s finding received little attention in his own time. It was not until the nineteenth century was at an end that the importance of the central limit theorem was discerned, when, in 1901, Russian mathematician Aleksandr Lyapunov defined it in general terms and proved precisely how it worked mathematically. Nowadays, the central limit theorem is considered to be the unofficial sovereign of probability theory. (Source: Wikipedia)

Turning back to reality, the Central Limit Theorem tells us that, for a reasonable size n, the sampling distribution (the distribution of all the means of all the possible samples of size n) is approximated by a Normal curve whose mean is mu, the mean of the population, and whose standard deviation is the standard deviation of the population divided by the square root of the sample size, n.

Read the rest of this entry »

Read the rest of this entry »

Storytelling – Kaggle Titanic Competition

Posted: 7 February, 2015 Filed under: Sin categoría 3 CommentsAs I told you in the first post I’d like to do some Competitions as my level increased. Now is time to start my Kaggle Competitions. Titanic, Machine Learning from disaster is one of the most helpful Competitions to start learning about Data Science. In this Kaggle page you will find a lot of help and you can learn how to start with different kind of languages Python, Excel and R, well in this blog I will do some Storytelling using R, concretely with Random Forest and Boosting. In near future I will post in comments or other posts more algorithms like Neural Network or SVM to solve this or other Competitions. But, what we know about the Titanic Disaster?  Read the rest of this entry »

Read the rest of this entry »

The amazing Genetic Algorithms!

Posted: 31 January, 2015 Filed under: Data Science Resources | Tags: Charles Darwin, Genetic Algortihm, John Holland, John Koza, Neural Network, Swarm Intelligence 6 CommentsWhy do we say Data Science? is this a part of the Science? if you think as an Statistics or Engineer it’s difficult to understand.

One of the most beautiful things in the earth is the nature. We don’t think about that but we are rounded about nature inspired objects, for example planes (like birds), buildings (like hives) or submarines (like whales). When we talk about the Computer World we have also looked at the nature world to learn how to find the best solutions to the most difficult problems.

In the Data Science Universe, more concretely in the algorithm side, we have interesting nature oriented solutions from fields like Neural Networks or Genetics or Swarm Intelligence.

Is really interesting thinking in how to find algorithms that emulate Neural Networks to solve daily problems we have, or look at the bees or ants to use Swarm Intelligence and replicate this behaviour to apply solutions in Healthcare or Public Administration to improve the quality of live of the people. This is my main objective try to give VALUE to the society and through the Data Science Universe I believe that it’s a reality!

Here you find examples of biological systems that have inspired computational algorithms.

Also in this blog you find more detail with examples around Algorithms in Nature.Turnig back to this post I focus in the Genetics Algorithm, in other posts I will talk about other Nature Algorithms.

Computer science and biology have enjoyed a long and fruitful relationship for decades. Biologists rely on computational methods to analyze and integrate large data sets, while several computational methods were inspired by the high-level design principles of biological systems.

Biologists have been increasingly relying on sophisticated computational methods, especially over the last two decades as molecular data have rapidly accumulated. Computational tools for searching large databases, including BLAST (Altschul et al, 1990), are now routinely used by experimentalists. Genome sequencing and assembly rely heavily on algorithms to speed up data accumulation and analysis (Gusfield, 1997; Trapnell and Salzberg, 2009; Schatz et al, 2010).

Computational methods have also been developed for integrating various types of functional genomics data and using them to create models of regulatory networks and other interactions in the cell (Alon, 2006; Huttenhower et al, 2009; Myers et al, 2009). Indeed, several computational biology departments have been established over the last few years that are focused on developing additional computational methods to aid in solving life science’s greatest mysteries.

But, What is a Data Scientist?

Posted: 17 January, 2015 Filed under: Data Science Resources | Tags: Data Science Resources Leave a commentI have started explaining how to become a Data Scientist, but … What is a Data Scientist? Is there an Official University or Professional career with this name? Do you need a Certification to show your skills as a Data Scientist? What are the main skills that you need to be named as a Data Scientist?

Data Scientist is one of the latest emerged jobs and maybe the sexiest job of the century as you can see in detail in this Forbes article.

A really funny description of Data Scientist I have founded in a tweet: “Person who is better at statistics than any software engineer and better at software engineering than any statistician”.

Then, do you need to study Statistics? Do you need to study also Software Engineering? Is there a career about Data Science?

There are a lot of courses, masters and technical trainings you can find in internet. For example in Barcelona there is an interesting Data Science Master awarded from the Graduate School of Economics, the University Autonomy of Barcelona and the Pompeu Fabra University. These universities understand that this program will be for:

- Graduates in Economics and Business with solid background and keen interest in quantitative methods

- Graduates in Statistics, Mathematics, Engineering, Computer Science, and Physics with the ambition to work with real-world problems and data

- Programming professionals who want to acquire analytical, quantitative tools to leverage their experience

- Aspiring PhD students looking for rigorous training in quantitative and analytical method

How to become a Data Scientist

Posted: 2 January, 2015 Filed under: Data Science Resources Leave a commentWhen I was studying Statistics I though that this was the Degree with more opportunities in the future. But when I started working I saw that maybe I was wrong. In fact my first work was as a Java Junior Developer, nothing to do with my studies. More or less 15 years later this could have changed.

The evolution of the Technology has increased exponentially the power of the computers. To be simple, in the area of Data Management, computers let us two main things:

- One is store all data, here the challenge is clear if we think that every 60 seconds Google receives over 4,000,000 search queries, YouTube users upload 71 hours of new videos, Pinterest users Pin 3,472 photos, Facebook users share, 2,460,000 pieces of content and Twitter users share 277,000 tweet (Infographic How much Data is Generated every minute).

- And the second, now we can explode this data applying all kind of new and advanced data management techniques like algorithms for predicting patterns or using parallel processing with Terabytes of data to extract and process the valuable information. For example we can talk about Genetics Algorithms (GA) that use the nature to find the best solutions, you can find one simple exercise of GA in the R-bloggers site using R.

The evolution of the Technology let us connecting all kind of devices with sensors, and these sensors transmit all kind of data through internet to act depending of the data processed. This is Internet of the Things or IoT, in Europe there are some initiatives that promote the IoT world with lots of resources, guides, subventions, … (Internet of the Things Europe Initiatives). These connections will produce huge quantities of Data then we will need Petabytes of storage, the best Computer performance and the most advanced Applications to process the Data. Here again the two constants: storage and the data management techniques.

Thanks to this evolution we are changing the world of the Public Sector applying this tech to Smartcities, eHealth, Agriculture,etc. In the case of Smartcitiy we can find Barcelona which is the first in Spain and the fourth in Europe with projects like Intelligent Traffic Lights or Apps4bcn.

Thanks to my past as Statistician and the new era of Data Management I have started a new hobby several months ago “Data Scientist”. My curiosity started in Coursera with this course about Machine Learning done by one of the Co-Founders and Chairman of Coursera the Data Scientist Andrew Ng.This course was a little bit intensive for me and I couldn’t dedicate the time you need for learning this fantastic material, I hope turn back in the mid future.

Recent Comments